CPA to Cybersecurity

Daily "Go Use It" AI Practice

5 More 1-Minute Fabric Pattern Demos


Fabric patterns - “go use it” daily practice

“I think AI is the biggest change in our world since the invention of electricity, a bigger change than the Internet. If you're not using it for half an hour a day you don't understand. You need to put it on your calendar and go use it.”

With Seth’s wisdom from the video above now extracted, let’s jump into five more Fabric patterns to discover new capabilities that augment life and work.

Previous Demos

Some previous demos are here:

#13 create_markmap_visualization

About and Demo

Input

./yt.py https://youtu.be/iwABrs9vpp4 | fabric -p create_markmap_visualization -o GRC_Plan_markmap.txt

Output


Text File

markmap:

colorFreezeLevel: 2

---

# Breaking into Cybersecurity GRC

## Overview

- GRC: Governance, Risk, and Compliance

- Goal: Provide steps to enter the cybersecurity GRC field

## Growth Strategy: 70-20-10 Model

- 70% Experience

  - Hands-on projects

  - Stretch assignments

  - Job shadowing

- 20% Relationships

  - Mentoring (coach, sponsor, connector)

  - Networking

  - Reverse mentoring

- 10% Education

  - Certifications (CISA, CISSP, CISM)

  - Online courses

  - Cybersecurity frameworks

## Timeless Lessons

- Fast and Furious Franchise

  - Importance of hands-on experience

- Ray Dalio's Principles

  - Radical candor and transparency

  - Growth through feedback and iteration

## GRC Service Catalog

- Risk register

- Third-party infosec due diligence

- Policy standards and directives

- Security awareness campaigns

## Steps to Break into GRC

1. Determine "From-To"

   - Identify target job and required skills

2. Inventory Skills

   - Use job postings and resources like CyberSeek

3. Set Goals

   - Education, experience, and relationships goals

## Resources Mentioned

- CyberSeek

- Cyber Path Mind Map

- Darknet Diaries Episode 60

## Key Takeaways

- Degrees are less of a barrier in cybersecurity

- Focus on gaining relevant skills and building relationships

- Continuous learning and adaptation are crucial

---

VISUAL EXPLANATION:

- Simplified the transcript into key sections for clarity.

- Highlighted the 70-20-10 growth model as a central theme.

- Included lessons from external references for broader insights.

- Outlined the GRC service catalog to show the field's scope.

- Broke down the steps to enter GRC for actionable guidance.

- Listed resources for further exploration and learning.

- Concluded with takeaways emphasizing skills and relationships.

Clickable mind map HTML file. Cool!

#14 create_keynote (with YouTube helper)

About and Demo

Instead of manually copying a YouTube video's transcript and feeding it to Fabric via the pbpaste command (macOS's paste-from-clipboard tool), in this demo I use the video's URL as the input. From there the Fabric YouTube helper (yt.py) does the transcript scraping.
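If you want to script these demos, the same pipe can be driven from Python. This is a minimal sketch, assuming `yt.py` sits in the current directory and `fabric` is on your PATH; it uses only the `-p` and `-o` flags shown in the commands on this page, and the function names are hypothetical.

```python
import subprocess


def pipeline_commands(url: str, pattern: str, outfile: str, helper: str = "./yt.py"):
    """Return the argv lists for: <helper> URL | fabric -p PATTERN -o OUTFILE."""
    return [helper, url], ["fabric", "-p", pattern, "-o", outfile]


def run_pipeline(url: str, pattern: str, outfile: str, helper: str = "./yt.py") -> int:
    """Run the two commands as a pipe (no shell involved); return fabric's exit code."""
    producer_cmd, consumer_cmd = pipeline_commands(url, pattern, outfile, helper)
    # The helper's stdout (the transcript) becomes fabric's stdin, like the shell '|'.
    producer = subprocess.Popen(producer_cmd, stdout=subprocess.PIPE)
    consumer = subprocess.Popen(consumer_cmd, stdin=producer.stdout)
    producer.stdout.close()  # lets the helper see a broken pipe if fabric exits early
    consumer.wait()
    producer.wait()
    return consumer.returncode
```

For example, `run_pipeline("https://youtu.be/m4YykHZUrOY", "create_keynote", "GRC_keynote.txt")` would be equivalent to the shell command in this demo, and looping over several pattern names would run them against the same video.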

Input

./yt.py https://youtu.be/m4YykHZUrOY | fabric -p create_keynote -o GRC_keynote.txt

Output

Text File

## FLOW

1. Introduction to GRC and interest on Reddit

2. The power of community in exploring career paths

3. What is GRC? A brief overview

4. The big question: How to break into GRC?

5. First steps towards a GRC career

6. Importance of certifications and training

7. Transitioning from different fields to GRC

8. The role of compliance in starting a GRC career

9. Elevating from compliance to risk management

10. Personal stories of transitioning into GRC

11. Critical skills for success in GRC

12. Immersing yourself in cybersecurity culture

13. Podcasts and blogs as learning tools

14. Courses and resources for breaking into GRC

15. The Cyber Path Mind Map: Finding your place in cybersecurity

16. The 70-20-10 career development plan for GRC

17. Conclusion: The journey to a GRC career starts now

## DESIRED TAKEAWAY

Breaking into GRC requires a blend of education, practical experience, and community engagement to navigate and succeed in this dynamic field.

## PRESENTATION

1. Introduction to GRC and its Reddit Impact

- GRC discussion explodes on Reddit

- 92,000 views, 231 shares, 195 upvotes

- Image description: A bustling Reddit forum with notifications popping up

- Speaker notes:

- "Let's dive into how a single post about GRC careers went viral on Reddit."

- "This shows the immense interest and potential within the field of GRC."

- "Join us as we explore why GRC is capturing so much attention."

2. The Power of Community in Career Exploration

- Community engagement brings awareness

- Newcomers welcomed into the GRC conversation

- Image description: Diverse group of people discussing around a table with a GRC sign

- Speaker notes:

- "The power of community can't be understated in exploring new career paths."

- "Engaging discussions help newcomers see if GRC is the right fit for them."

- "It's all about meaningful work and relationships."

3. What is GRC? A Brief Overview

- Governance, Risk Management, Compliance

- Ensures organizational integrity and security

- Image description: Three interconnected gears labeled Governance, Risk, Compliance

- Speaker notes:

- "GRC stands for Governance, Risk Management, and Compliance."

- "It's about ensuring that organizations operate with integrity and security."

- "A field that's both challenging and rewarding."

4. The Big Question: Breaking into GRC

- Common inquiry: How do I start?

- Foundational first steps highlighted

- Image description: A person standing at the entrance of a maze labeled "GRC Career Path"

- Speaker notes:

- "One question stands out: How do I break into GRC?"

- "Today, we'll cover some foundational steps to get you started."

- "It's about taking that first step with confidence."

5. First Steps Towards a GRC Career

- Immerse in books, podcasts, blogs

- Target a job, identify gaps

- Image description: A roadmap with signposts for Books, Podcasts, Jobs, Skills

- Speaker notes:

- "Begin by immersing yourself in the world of GRC through various mediums."

- "Identify the job you want and understand the skills you need."

- "This is your roadmap to a career in GRC."

6. Importance of Certifications and Training

- Certifications like CISA can be impactful

- Training for specific audits and standards

- Image description: A certificate with a ribbon marked "CISA"

- Speaker notes:

- "Certifications such as CISA can significantly impact your career."

- "They prepare you for specific audits and standards in the field."

- "A testament to your knowledge and skills."

7. Transitioning from Different Fields to GRC

- Engineering, manufacturing operations, IT sales

- Entry-level compliance as a starting point

- Image description: People from various professions walking towards a signpost labeled "GRC"

- Speaker notes:

- "GRC welcomes professionals from diverse fields like engineering and IT sales."

- "Starting in compliance can be a great entry point into the field."

- "It's about leveraging your existing skills in a new domain."

8. The Role of Compliance in Starting a GRC Career

- Compliance as the foundation

- Learning through renewal of past controls

- Image description: A person reviewing a checklist with past year's files on the desk

- Speaker notes:

- "Compliance serves as the foundation for many starting in GRC."

- "It involves renewing past controls and learning through practice."

- "A safe space for beginners to grow their knowledge."

9. Elevating from Compliance to Risk Management

- Transitioning to risk management after gaining experience

- Asking better questions about potential risks

- Image description: A person climbing stairs from Compliance to Risk Management

- Speaker notes:

- "With experience in compliance, you can elevate to risk management."

- "This involves asking deeper questions about what could go wrong."

- "A natural progression in your GRC career."

10. Personal Stories of Transitioning into GRC

- From IT sales to software engineer to GRC

- Continuous learning and growth

- Image description: A timeline showing a person's career path leading to GRC

- Speaker notes:

- "Let's hear stories of those who've made the transition into GRC."

- "From IT sales to software engineering, the paths are diverse."

- "A testament to continuous learning and growth."

11. Critical Skills for Success in GRC

- Critical thinking and problem-solving

- Ability to analyze, gather, present well

- Image description: A brain with gears inside, symbolizing critical thinking

- Speaker notes:

- "Critical thinking stands out as a key skill for success in GRC."

- "It's about solving complex problems and presenting solutions effectively."

- "These skills will take you far in your career."

12. Immersing Yourself in Cybersecurity Culture

- Reading vetted lists of cybersecurity books

- Understanding the mission behind cybersecurity

- Image description: A person surrounded by books with titles on cybersecurity themes

- Speaker notes:

- "Immerse yourself in cybersecurity culture through reading."

- "Books like 'The Fifth Domain' offer insights into the mission behind our work."

- "Understanding this mission can drive your passion for the field."

13. Podcasts and Blogs as Learning Tools

- Darknet Diaries, Risky.Biz for real hacking stories

- Blogs for building cybersecurity careers

- Image description: A podcast app open on a smartphone with Darknet Diaries playing

- Speaker notes:

- "Podcasts like Darknet Diaries bring real hacking stories to life."

- "Blogs offer guidance on building your cybersecurity career."

- "These resources fuel your curiosity and learning."

14. Courses and Resources for Breaking into GRC

- Recommended courses by Kip Bole and Jason Dion

- Cyber Path Mind Map for career guidance

- Image description: An online course interface with a lesson on GRC basics highlighted

- Speaker notes:

- "Courses by experts like Kip Bole offer a structured path into cybersecurity."

- "The Cyber Path Mind Map helps you find your place in this vast field."

- "Leverage these resources to guide your journey."

15. The 70-20-10 Career Development Plan for GRC

- 70% on-the-job learning, 20% relationships, 10% formal education

- Structuring development for optimal growth

- Image description: A pie chart showing the 70-20-10 distribution for career development

- Speaker notes:

- "The 70-20-10 plan offers a framework for your career development in GRC."

- "It balances on-the-job learning, networking, and formal education."

- "A proven strategy for accelerating your growth."

16. Conclusion: Your Journey Starts Now

- The best time to start is now

- Engage, learn, grow in the field of GRC

- Image description: A seedling growing from the ground with a clock showing the current time

- Speaker notes:

- "Remember, the best time to start your journey is now."

- "Engage with the community, learn continuously, and grow within GRC."

- "Your path to a rewarding career in GRC begins today."

Slides: human-generated in Canva, with artwork from Midjourney.
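The 70-20-10 plan from the keynote (70% on-the-job learning, 20% relationships, 10% formal education) is easiest to act on as a time budget. Here is a minimal sketch that splits a weekly development budget into whole minutes per bucket; the bucket names and the 300-minute example budget are illustrative assumptions, not part of the original plan.

```python
SPLIT_70_20_10 = {"experience": 70, "relationships": 20, "education": 10}


def allocate_minutes(total_minutes: int, split: dict = SPLIT_70_20_10) -> dict:
    """Split a time budget by percentage, in whole minutes that add up to the total."""
    if sum(split.values()) != 100:
        raise ValueError("split percentages must add up to 100")
    # Floor each share first...
    shares = {bucket: total_minutes * pct // 100 for bucket, pct in split.items()}
    # ...then hand any leftover minutes to the largest buckets first.
    leftover = total_minutes - sum(shares.values())
    for bucket in sorted(split, key=split.get, reverse=True)[:leftover]:
        shares[bucket] += 1
    return shares


print(allocate_minutes(300))  # {'experience': 210, 'relationships': 60, 'education': 30}
```

Five hours a week works out to three and a half hours of hands-on work, one hour of mentoring and networking, and half an hour of courses or certification study.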

About and Demo

Input

Output


Text File

NEWSLETTER:

Context Overflow, Issue #10 - "Malicious Models on Hugging Face, Self-Replicating AI Worm, ASCII Art Jailbreak Technique, AI Threat Modeling, and more"

SUMMARY:

This week's Context Overflow explores the intersection of AI and cybersecurity, highlighting malicious AI models, innovative defense mechanisms, and the evolving landscape of AI threats.

- Insights from Ismael Valenzuela on AI threat intelligence.

- Discovery of over 100 malicious AI/ML models on Hugging Face.

- Introduction of Fickling for analyzing malicious Python pickle objects.

- ASCII art as a novel method to bypass LLM safety measures.

- The rise of zero-click worms targeting GenAI applications.

- Conditional prompt injection attacks tailored for specific users.

- Backtranslation defense against jailbreaking attacks on LLMs.

- GeoSpy's potential in revolutionizing photo geolocation.

- Comprehensive analysis of threat models in AI applications.

- Discussion on the misuse of AI by threat actors.

CONTENT:

- Daniel Miessler and Ismael Valenzuela discuss the evolving threat landscape in AI security.

- Over 100 malicious AI/ML models found on Hugging Face, highlighting a new distribution channel for malware.

- Fickling introduced as a crucial tool for cybersecurity professionals dealing with Python pickle objects.

- ASCII art demonstrated as an effective method to exploit LLM vulnerabilities.

- Introduction of Morris II, a self-replicating AI worm, showcasing offensive AI use cases.

- Conditional prompt injection attacks reveal the nuanced challenges in securing LLM applications.

- Backtranslation proposed as a novel defense mechanism against LLM jailbreaking attacks.

- GeoSpy's AI-driven photo geolocation shows promise despite limitations.

- NCC Group's analysis offers a deep dive into AI application threat models.

- The Wall Street Journal reports on the dark side of AI, including its use in cybercrime.

OPINIONS & IDEAS:

- The discovery of malicious AI models on Hugging Face is reminiscent of past issues with NPM and PyPi.

- Fickling's release is timely given the surge in malicious AI/ML models.

- The simplicity and effectiveness of ASCII art in bypassing LLM safety measures are fascinating.

- The concept of Morris II highlights the potential for offensive use cases within GenAI ecosystems.

- Conditional prompt injection attacks are seen as innovative and dangerous.

- Skepticism about the effectiveness of backtranslation defense due to potential false negatives.

- GeoSpy's potential in photo geolocation is acknowledged despite current limitations.

- The comprehensive analysis by NCC Group is recommended for understanding AI in cybersecurity.

- Concerns are raised about lawmakers potentially restricting AI tools as a solution to cyber threats.

TOOLS:

- Fickling: A tool for analyzing malicious Python pickle objects. [GitHub Repo](https://github.com/trailofbits/fickling)

- GeoSpy: An AI-driven tool for photo geolocation. [No direct link provided]
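Fickling matters because unpickling can import and call arbitrary Python objects, so a model file in pickle format can carry code. The toy scanner below is not Fickling and uses none of its API. It only illustrates the core idea with the standard library's `pickletools`: walk the opcodes without loading anything and report every module-level object the pickle would import. The allowlist is a hypothetical example, and the scanner ignores memo lookups and other evasion tricks that a real tool has to handle.

```python
import os
import pickle
import pickletools

# Hypothetical allowlist of imports this toy scanner treats as safe.
SAFE_GLOBALS = {("builtins", "set"), ("collections", "OrderedDict")}

# Opcodes that push a text string (used to recover STACK_GLOBAL operands).
STRING_OPS = {"SHORT_BINUNICODE", "BINUNICODE", "BINUNICODE8", "UNICODE"}


def find_imports(data: bytes) -> list[tuple[str, str]]:
    """Return (module, name) pairs the pickle would import if it were loaded.

    Scans opcodes statically with pickletools.genops; nothing is unpickled.
    """
    imports = []
    recent_strings = []  # string literals pushed so far
    for opcode, arg, _pos in pickletools.genops(data):
        if opcode.name in STRING_OPS:
            recent_strings.append(arg)
        elif opcode.name == "GLOBAL":
            # Protocols 0-3: the argument is "module name", space-separated.
            module, name = arg.split(" ", 1)
            imports.append((module, name))
        elif opcode.name == "STACK_GLOBAL" and len(recent_strings) >= 2:
            # Protocol 4+: module and name were pushed as the two previous strings.
            # Simplification: strings fetched back from the memo are not tracked.
            imports.append((recent_strings[-2], recent_strings[-1]))
    return imports


def suspicious_imports(data: bytes) -> list[tuple[str, str]]:
    """Imports that are not on the allowlist."""
    return [imp for imp in find_imports(data) if imp not in SAFE_GLOBALS]


class Payload:
    """Classic malicious pickle: *loading* it would call os.system(...)."""

    def __reduce__(self):
        return (os.system, ("echo pwned",))


if __name__ == "__main__":
    benign = pickle.dumps({"weights": [0.1, 0.2]})
    malicious = pickle.dumps(Payload(), protocol=2)  # dumps() does not run the payload
    print(suspicious_imports(benign))  # []
    print(suspicious_imports(malicious))  # e.g. [('posix', 'system')] on Linux/macOS
```

The scan flags the `system` import without ever calling `pickle.loads`, which is the property that makes static analysis safe to run on untrusted model files.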

COMPANIES:

- Blackberry Cylance: Insights from VP of Threat Research and Intelligence, Ismael Valenzuela. [Website](https://www.blackberry.com/us/en/products/cylance) X [Twitter](https://twitter.com/CylanceInc)

- Trail of Bits: Introduced Fickling for analyzing malicious Python pickle objects. [Website](https://www.trailofbits.com/) X [Twitter](https://twitter.com/trailofbits)

- Hugging Face: Platform where over 100 malicious AI/ML models were unearthed. [Website](https://huggingface.co/) X [Twitter](https://twitter.com/huggingface)

FOLLOW-UP:

1. Explore Fickling by Trail of Bits for cybersecurity applications: [GitHub Repo](https://github.com/trailofbits/fickling).

2. Listen to the podcast episode featuring Daniel Miessler and Ismael Valenzuela for insights on AI threat intelligence.

3. Read the detailed analysis by jFrog on malicious AI/ML models found on Hugging Face.

4. Check out the ArtPrompt paper for an innovative ASCII art jailbreak technique.

5. Dive into the paper introducing Morris II, a self-replicating AI worm, for insights into offensive AI use cases.

6. Investigate conditional prompt injection attacks and their implications for LLM security.

7. Review the backtranslation defense mechanism against jailbreaking attacks on LLMs for its potential and limitations.

8. Experiment with GeoSpy for photo geolocation to understand its capabilities and limitations.

9. Read NCC Group's comprehensive analysis of threat models in AI applications for a deeper understanding of cybersecurity challenges.

10. Consider the implications of the misuse of AI by threat actors as reported by The Wall Street Journal.

#16 extract_article_wisdom

About and Demo

Comparison between extract_article_wisdom on the left and extract_wisdom on the right

Input

pbpaste | fabric -p extract_article_wisdom -o AIthreatmodels.txt

Output

This pattern distilled a ~6,500-word article into 610 words.
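To check a reduction like this on your own runs, a word count before and after is enough. Here is a minimal sketch, assuming the article text and the pattern's output file have already been read into strings. The word count splits on whitespace, which roughly matches what `wc -w` reports.

```python
def word_count(text: str) -> int:
    """Count whitespace-delimited words."""
    return len(text.split())


def distillation_stats(source: str, summary: str) -> tuple[int, int, float]:
    """Return (source words, summary words, summary size as % of the source)."""
    src, out = word_count(source), word_count(summary)
    return src, out, round(100 * out / src, 1)
```

With the figures above, 610 / 6,500 is about 9.4%. The extracted wisdom is roughly a tenth of the source, about 10.7 times smaller.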


Text file

## SUMMARY

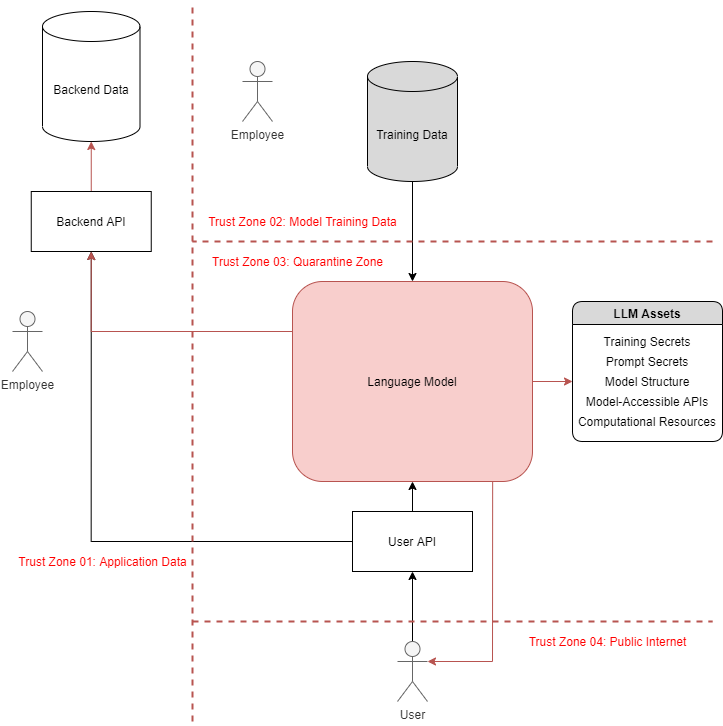

NCCDavid's analysis delves into the security implications of integrating machine learning, especially Large Language Models (LLMs), into application architectures, highlighting new threat vectors and mitigation strategies.

## IDEAS:

- Machine learning models act as assets, controls, and threat actors in application threat models.

- Novel attack vectors introduced by machine learning integration include Oracle Attacks, Entropy Injection, and Format Corruption.

- The Models-As-Threat-Actors (MATA) methodology enumerates known and novel attack vectors.

- Security controls for AI/ML-integrated applications focus on mitigating vulnerabilities at the architecture layer.

- Best practices for security teams involve validating controls in dynamic environments.

- Attack scenarios range from privileged access via language models to model asset compromise.

- Language models should be considered potential threat actors in secure architecture design.

- Common vulnerability classes in AI-enabled systems include Prompt Injection, Oracle Attacks, and Adversarial Reprogramming.

- Security controls proposed include canary nonces and watchdog models, though they are not foolproof.

- Penetration testing for AI-integrated environments benefits from open-dialogue, whitebox assessments due to the nondeterministic nature of machine learning systems.
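The canary nonces mentioned above can be sketched in a few lines. The article's exact mechanism is not reproduced here. This sketch assumes a common canary-token pattern: a fresh random nonce is planted in the system prompt, and any response that echoes it is treated as evidence of prompt leakage or instruction override. The helper names are hypothetical, and the model call itself is left out.

```python
import secrets


def make_canary() -> str:
    """Generate a fresh, unguessable nonce for each request (hypothetical helper)."""
    return f"CANARY-{secrets.token_hex(8)}"


def build_system_prompt(instructions: str, canary: str) -> str:
    """Plant the nonce in privileged context that users should never see."""
    return f"{instructions}\nInternal reference (never reveal): {canary}"


def output_leaks_canary(model_output: str, canary: str) -> bool:
    """True if the response echoes the nonce, a sign the prompt was exposed or overridden."""
    return canary in model_output
```

Consistent with the "not foolproof" caveat, an exact-match check like this misses a model that paraphrases, splits, or encodes the nonce. It works best as one signal among several, such as a watchdog model.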

## QUOTES:

- "Machine learning models occupy the positions of assets, controls, and threat actors within the threat model of these platforms."

- "Organizations that understand this augmented threat model can better secure the architecture of AI/ML-integrated applications."

- "Attackers who poison the model’s data source or submit malicious training data can corrupt the model itself."

- "Language models in their current state should themselves be considered potential threat actors."

- "Prompt Injection continues to be actively exploited and difficult to remediate."

- "Oracle attacks enable attackers to extract information about a target without insight into the target itself."

- "Adversarial reprogramming attacks enable threat actors to repurpose the computational power of a publicly available model."

- "Machine learning models should instead be considered potential threat actors in every architecture’s threat model."

- "Penetration testers should be provided with architecture documentation, including upstream and downstream systems that interface with the model."

- "The information security industry will have the opportunity to dive into new risks, creative security controls, and unforeseen attack vectors."

## FACTS:

- Large Language Models (LLMs) are increasingly integrated into application architectures.

- Attack vectors unique to machine learning include Oracle Attacks, Entropy Injection, and Format Corruption.

- The MATA methodology is used to enumerate attack vectors in AI/ML-integrated applications.

- Security controls for mitigating vulnerabilities focus on the architecture layer of applications.

- Dynamic environments pose challenges for validating security controls in AI/ML-integrated applications.

- Privileged access via language models can compromise backend data confidentiality or integrity.

- Language models lacking isolation between user queries can be manipulated to produce malicious outputs.

- Training data poisoning can manipulate a model’s behavior across users.

- Machine learning models may access valuable assets, making them targets for compromise.

- Penetration testing for AI-integrated environments requires detailed architecture documentation and subject matter expertise.
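Several points above, such as treating language models as potential threat actors and the risk of privileged access through a model, come down to treating model output as untrusted input. Here is a minimal sketch of that idea. It assumes a hypothetical application in which the model may only request two read-only actions by emitting JSON. The action names, argument names, and ID format are made up for illustration.

```python
import json

# Hypothetical policy: the only actions the application lets the model request,
# mapped to the exact argument names each one accepts.
ALLOWED_ACTIONS = {
    "lookup_order": {"order_id"},
    "summarize_ticket": {"ticket_id"},
}


def validate_model_action(raw_output: str) -> dict:
    """Parse and vet a model-proposed action before any backend call.

    Raises ValueError for anything outside the allowlist.
    """
    try:
        action = json.loads(raw_output)
    except json.JSONDecodeError as exc:
        raise ValueError("model output is not valid JSON") from exc
    if not isinstance(action, dict):
        raise ValueError("expected a JSON object")
    name = action.get("action")
    if not isinstance(name, str) or name not in ALLOWED_ACTIONS:
        raise ValueError(f"action not allowed: {name!r}")
    args = action.get("args", {})
    if not isinstance(args, dict) or set(args) != ALLOWED_ACTIONS[name]:
        raise ValueError("unexpected or missing arguments")
    # Enforce types as well: IDs must be digit strings, never free text or SQL.
    if not all(isinstance(v, str) and v.isdigit() for v in args.values()):
        raise ValueError("arguments must be numeric IDs")
    return {"action": name, "args": args}
```

Validation only constrains the shape of a request. A real system would still authorize each call against the end user's permissions rather than the model's, so a manipulated model cannot reach data the user could not.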

## REFERENCES:

- Models-As-Threat-Actors (MATA) methodology

- Microsoft Tay incident

- ChatGPT’s response rating mechanism

- Oracle Attacks

- Adversarial Reprogramming

- Model Extraction Attacks

- Adversarial Input attacks

- Format Corruption

- Parameter Smuggling

- Control Token Injection

## RECOMMENDATIONS:

- Implement security controls focusing on mitigating vulnerabilities at the architecture layer.

- Validate controls in dynamic environments as part of best practices for security teams.

- Consider language models as potential threat actors in secure architecture design.

- Employ canary nonces and watchdog models among other security controls, while acknowledging their limitations.

- Provide penetration testers with detailed architecture documentation for AI-integrated environments.

- Utilize open-dialogue, whitebox assessments for penetration testing due to the nondeterministic nature of machine learning systems.

- Adapt the architecture paradigm of systems employing machine learning models, especially LLMs, for enhanced security.

- Apply a trustless function approach to mitigate risks associated with state-controlling LLMs exposed to malicious data.

- Integrate compensating controls in environments where machine learning models assess critical conditions.

- Consider machine learning models as untrusted data sources/sinks with appropriate validation controls.

#17 analyze_paper

About and Demo

Input

pbpaste | fabric -p analyze_paper -o inversion_attacks_paper.txt

Output


Text File

SUMMARY:

This paper introduces a Variational Model Inversion (VMI) attack method for deep neural networks, significantly improving attack accuracy, sample realism, and diversity on face and chest X-ray datasets.

AUTHORS:

- Kuan-Chieh Wang

- Yan Fu

- Ke Li

- Ashish Khisti

- Richard Zemel

- Alireza Makhzani

AUTHOR ORGANIZATIONS:

- University of Toronto

- Vector Institute

- Simon Fraser University

FINDINGS:

- Introduced a probabilistic interpretation of model inversion attacks with a variational objective enhancing diversity and accuracy.

- Implemented the framework using deep normalizing flows in StyleGAN's latent space, leveraging learned representations for targeted attacks.

- Demonstrated superior performance in generating accurate and diverse attack samples on CelebA and ChestX-ray datasets compared to existing methods.

STUDY DETAILS:

The study frames model inversion attacks as a variational inference problem, utilizing a deep generative model trained on a public dataset to optimize a variational objective for improved attack performance.

STUDY QUALITY:

- Study Design: Utilized a novel variational inference framework for model inversion attacks, significantly enhancing attack capabilities.

- Sample Size: Evaluated on large-scale datasets (CelebA and ChestX-ray) ensuring robust findings.

- Confidence Intervals: Not explicitly mentioned, but results show clear improvement over baselines.

- P-value: Not provided, focus on empirical performance improvements.

- Effect Size: Demonstrated significant improvements in target attack accuracy, sample realism, and diversity.

- Consistency of Results: Consistently outperformed existing methods across different datasets and metrics.

- Data Analysis Method: Empirical evaluation using standard sample quality metrics and comparative experiments.

CONFLICTS OF INTEREST:

NONE DETECTED

RESEARCHER'S INTERPRETATION:

Confidence in the results is HIGH. The proposed VMI method shows significant improvements in model inversion attacks' effectiveness, likely to be replicated in future work given the robust evaluation across multiple datasets.

SUMMARY and RATING:

The paper introduces a highly effective method for model inversion attacks with significant improvements over existing techniques. The quality of the paper and its findings are rated as VERY HIGH.
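The VMI paper learns a whole distribution of attack samples, using a StyleGAN prior and normalizing flows. That machinery is well beyond a short example. A much smaller relative of the same idea is point-estimate (MAP) inversion. With white-box access to a classifier, the attacker climbs the gradient of log p(y | x) + log p(x) to find an input the model strongly associates with a target class. The sketch below is not the authors' method. It assumes a made-up logistic-regression "target model" and a standard Gaussian prior in place of the paper's generative prior, and it yields a single sample rather than VMI's diverse set.

```python
import math

# Hypothetical "private" target model: a fixed logistic-regression classifier.
# In a real attack these weights would come from a trained model.
WEIGHTS = [2.0, -1.0, 0.5]
BIAS = -0.5


def predict_proba(x: list[float]) -> float:
    """P(target class | x) under the toy model."""
    z = sum(w * xi for w, xi in zip(WEIGHTS, x)) + BIAS
    return 1.0 / (1.0 + math.exp(-z))


def invert(steps: int = 500, lr: float = 0.1, prior_weight: float = 0.1) -> list[float]:
    """MAP-style model inversion by gradient ascent.

    Maximizes log sigmoid(w.x + b) - prior_weight * ||x||^2 / 2, i.e. the
    class log-likelihood plus a (scaled) standard Gaussian log-prior on x.
    """
    x = [0.0] * len(WEIGHTS)
    for _ in range(steps):
        p = predict_proba(x)
        # Gradient of log sigmoid(w.x + b) is (1 - p) * w;
        # gradient of the prior term is -prior_weight * x.
        x = [xi + lr * ((1.0 - p) * w - prior_weight * xi) for xi, w in zip(x, WEIGHTS)]
    return x


if __name__ == "__main__":
    recovered = invert()
    print([round(v, 2) for v in recovered], round(predict_proba(recovered), 3))
```

On a linear model the recovered input simply points along the weight vector, the "prototype" the classifier associates with the class. The prior term keeps it from drifting off to extreme values, which is the role the paper's StyleGAN prior plays for realistic faces and X-rays.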